Overview

Generative AI testing tools are designed to evaluate, monitor, and validate the performance of AI models that create text, images, audio, or video. These tools ensure that AI-generated content is accurate, ethical, and aligned with business goals. They help detect biases, measure output quality, and maintain compliance with regulations. By automating testing workflows, these tools save time and improve reliability. Whether for large language models, generative design, or AI-driven creativity, testing tools are critical for building trust and accountability in AI systems. They empower developers, QA teams, and enterprises to scale AI adoption responsibly.

1. Lakera Guard

Lakera Guard specializes in prompt injection protection and testing for generative AI. It identifies vulnerabilities, ensures safe model outputs, and reduces risks of malicious prompts. It’s widely used to secure enterprise-grade AI applications.

2. PromptLayer

PromptLayer allows developers to track, monitor, and test AI prompts effectively. It provides version control and analytics to evaluate how different prompts perform. This helps in refining prompts for consistent and high-quality outputs.

3. DeepEval

DeepEval is an open-source testing framework for LLM applications. It enables users to create custom evaluation metrics, run test suites, and benchmark generative AI models. Developers use it to ensure reliability and performance.

4. TruLens

TruLens offers evaluation and monitoring tools for generative AI applications. It provides insights into AI outputs, detects errors, and measures trustworthiness. Its integration with LLM apps makes testing seamless and effective.

5. Arize AI

Arize AI provides observability and testing for machine learning and generative AI models. It helps monitor drift, bias, and quality issues. Teams use Arize to improve model performance and ensure responsible AI deployment.

6. Giskard

Giskard is an open-source tool for testing AI and LLM models. It identifies biases, safety issues, and risks in generative outputs. The platform supports collaborative testing, making it ideal for AI development teams.

7. Humanloop

Humanloop helps developers test and optimize prompts for large language models. It provides A/B testing, analytics, and fine-tuning support. This ensures more accurate and reliable generative AI results.

8. Robust Intelligence

Robust Intelligence focuses on stress-testing AI systems against vulnerabilities. It automatically detects weaknesses in generative models and prevents harmful outputs, making AI adoption safer for enterprises.

9. Weights & Biases (W&B)

Weights & Biases is a popular platform for tracking, testing, and evaluating AI experiments. It supports generative AI workflows, helping teams validate model outputs and improve consistency.

10. Promptfoo

Promptfoo is a framework for testing and evaluating generative AI prompts. It supports automated test cases, scoring, and result comparison, making it easier to optimize LLM-powered applications.

(FAQs)

1. Why are generative AI testing tools important?

They ensure AI outputs are accurate, ethical, and safe by detecting errors, biases, and vulnerabilities in generative systems.

2. Can these tools be used for all types of AI models?

Yes, many tools support text, image, and multimodal generative AI models, making them versatile across use cases.

3. Do developers need coding skills to use these tools?

Some tools are no-code or low-code, while others require technical knowledge. The choice depends on project needs and team expertise.

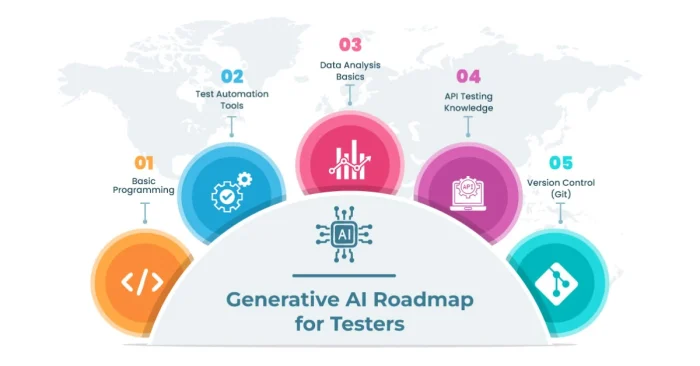

Learn More About AI Course https://buhave.com/courses/learn/ai